You can find the first article of the series here: Crazy DevOps interview questions.

Question 1:

Suppose you run the following commands:

    # cd /tmp
    # mkdir a
    # cd a
    # mkdir b
    # cd b
    # ln /tmp/a a

… what is the result?

At this point one may point out that the hard link being defined would basically create a circular reference, which is a correct answer on its own. It’s not complete, though: how would the operating system (the file system) handle such a command, anyway?

A command-line guru may simply dismiss the question, saying that hard links are not allowed for directories and that’s about it. Another guru may point out that we’re missing the -d parameter to ln, so the command will fail before anything else is considered. Correct, but still not the complete answer expected by the interviewer.

The complete answer must point out that:

- Not all file systems disallow directory hard links, although most do. The notable exception is HFS+ (Apple’s Mac OS X).

- Hard links are, by definition, multiple directory entries pointing to a single inode. There is a “hard link counter” field within the inode. Deleting a hard link decrements that counter; the file itself is deleted only when the counter was 1, i.e. when the last link is removed.

- Directory hard links are not so inherently dangerous that they must be disallowed by default. The major problem with them is the circular reference situation described above. This can be solved by using graph theory (detecting cycles), but such an implementation is both CPU and memory intensive.

- The decision to disallow hard links for directories was taken with this computation cost in mind, as the cost grows with the file system size.
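The points above can be checked with a short experiment. The following is a minimal sketch in Python, assuming a POSIX system; the function names are made up for illustration. It recreates the directory layout from the question inside a scratch directory, attempts the directory hard link through `os.link` (which issues the same `link` system call that `ln` relies on), and then shows the inode link counter changing for a regular file.

```python
import os
import tempfile


def try_directory_hardlink(base):
    """Recreate <base>/a/b and attempt the equivalent of `ln <base>/a a` inside b.

    Returns the OSError raised by the operating system, or None if the
    file system actually created the link.
    """
    target = os.path.join(base, "a")
    link_name = os.path.join(target, "b", "a")
    os.makedirs(os.path.join(target, "b"))
    try:
        os.link(target, link_name)
    except OSError as err:
        return err
    return None


def link_count_demo(base):
    """Track the inode link counter of a regular file as links come and go."""
    original = os.path.join(base, "file")
    alias = os.path.join(base, "alias")
    with open(original, "w") as handle:
        handle.write("payload")
    counts = [os.stat(original).st_nlink]      # one directory entry
    os.link(original, alias)
    counts.append(os.stat(original).st_nlink)  # two entries, same inode
    os.remove(original)
    counts.append(os.stat(alias).st_nlink)     # back to one, data still there
    with open(alias) as handle:
        survived = handle.read() == "payload"
    return counts, survived


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as base:
        print("directory hard link:", try_directory_hardlink(base))
    with tempfile.TemporaryDirectory() as base:
        print("link counts, data survived:", link_count_demo(base))
```

On Linux the first call typically reports "Operation not permitted", which is the file system refusing the circular reference up front rather than detecting it later.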

I agree with you that such a comprehensive answer is usually expected in an interview at one of the “Big Four” technology companies.

Every large deployment has the odd Windows node; well, not every deployment, as many places have Windows-only environments. The reason is quite simple: most closed-source SDKs do not run on anything but Windows, so one needs to write a Windows service to access that functionality. Fortunately for command-line gurus, Microsoft has created an extraordinary toolset: PowerShell.

When coding something in PowerShell, one must remember two things:

- The script files should end in .ps1 rather than .bat (and one must usually relax a security setting, the execution policy, to get the system to run them);

- Most tasks are one-liners.

Coming from Linux scripting, some things may look odd (e.g. in Windows PowerShell, Move-Item cannot move a directory to a different drive; one must copy it recursively and then delete the source), but in the end the job gets done. I have put below a few basic tasks that one may at some point need to perform.

1. Getting a zip file from an HTTP server and unzipping it:

    # Source URL, scratch folder and install folder
    $source_file = "http://internal.repo/package/module.zip"
    $tmp_folder = "C:\Temp"
    $dest_folder = "C:\Program Files\Internal\Application"
    # Keep the .zip extension on the downloaded file
    $source_file_name = [System.IO.Path]::GetFileName($source_file)
    $dest_zip = "$tmp_folder\$source_file_name"
    # -Force makes New-Item succeed when the folder already exists
    New-Item -ItemType Directory -Force -Path $tmp_folder
    Invoke-WebRequest $source_file -OutFile $dest_zip
    New-Item -ItemType Directory -Force -Path $dest_folder
    # ZipFile lives in an assembly that is not loaded by default
    Add-Type -AssemblyName System.IO.Compression.FileSystem
    [System.IO.Compression.ZipFile]::ExtractToDirectory($dest_zip, $dest_folder)
    Remove-Item -Path "$dest_zip" -Force
    ### No -Force for the temp folder
    Remove-Item -Path "$tmp_folder"

This text is about doing backups for data already existing in AWS, not for outside data, although some methods apply to both cases. But let’s start from the beginning:

What Data?

Your data can be located on EC2 nodes (virtual servers), or you may be using some dedicated database service such as RDS. The dedicated services already have backup functionality built in, with settings easily accessible through the interface. I won’t deal with those but rather with the “raw” data you may have on a node.

The data on the node falls into two categories, or can be looked at from two different perspectives:

- When one wants to capture the “system state” at a certain point in time. This perspective does not consider the data composition, but the functionality that is being captured for use at a later date as a known good fallback point.

- When one wants to get the state of a specific subsystem (e.g. a subset of the local storage, a subset of the local database). This is the “classical backup” as it is widely known.

Capturing State

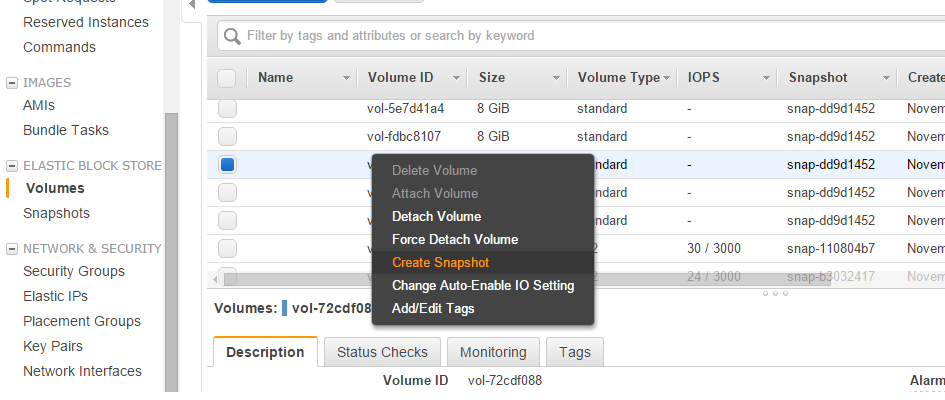

AWS offers full support for taking snapshots of volumes:

One does not need to use only the interface; all the functionality is available programmatically. One may also want to look at Boto, the AWS library for Python.
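As an illustration of the programmatic route, here is a minimal sketch of starting a volume snapshot. It assumes boto3 (the current generation of the Boto library) rather than anything prescribed above; the function name and the `wait` parameter are made up for this example. The client is passed in as an argument so the function can be tried against a stub before it touches a real account.

```python
def snapshot_volume(ec2_client, volume_id, description, wait=False):
    """Start an EBS snapshot of one volume and return the snapshot ID.

    ec2_client is expected to behave like boto3.client("ec2").
    """
    response = ec2_client.create_snapshot(
        VolumeId=volume_id,
        Description=description,
    )
    snapshot_id = response["SnapshotId"]
    if wait:
        # Block until AWS reports the snapshot as completed.
        waiter = ec2_client.get_waiter("snapshot_completed")
        waiter.wait(SnapshotIds=[snapshot_id])
    return snapshot_id


# Possible usage, assuming boto3 is installed and credentials are configured
# (the volume ID below is a placeholder, not a real volume):
#
#   import boto3
#   ec2 = boto3.client("ec2")
#   print(snapshot_volume(ec2, "vol-0123456789abcdef0", "known good fallback point"))
```

Run from a cron job, a call like this would give the “known good fallback point” described earlier without anyone opening the console.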

Classical Backup

One can store files through programmatic means (anything from cron-based scripts to full-fledged backup software that runs on a schedule) in the following Amazon cloud services:

- Simple Storage Service (S3): this is the easiest to use, as it offers instant storage, instant retrieval and also versioning (e.g. you may mirror some directory contents on the secure storage at various points in time). It is not a cost-effective method of storage for huge amounts of data (multiple terabytes) over long periods of time.

- Glacier: this is the equivalent of tape storage. The retrieval is not instant (one must schedule such retrieval in advance). It does not support versioning by default. It is 3-4 times cheaper than S3, though.

- A dedicated EC2 node (or multiple nodes organized as a backup storage cluster): this is not cost-effective but may work in certain scenarios (e.g. live data mirroring).

- A dedicated database in RDS: this is far from cost-effective but is the solution if one wants to use some existing backup software that can only store data to a database.
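For the S3 option, a cron-driven script could look roughly like the sketch below. It is only an illustration under stated assumptions: it uses boto3's `upload_file` call on an S3 client, and the function names, the `backups` key prefix and the date-based key layout are invented for this example. Each run archives a directory and uploads it under a time-stamped key, so earlier backups are kept side by side.

```python
import os
import tarfile
import tempfile
import time


def backup_key(prefix, archive_name, now=None):
    """Build a time-stamped object key (UTC) so each run gets its own object."""
    stamp = time.strftime("%Y/%m/%d/%H%M%S", time.gmtime(now))
    return f"{prefix}/{stamp}-{archive_name}"


def upload_backup(s3_client, bucket, source_dir, prefix="backups", now=None):
    """Archive source_dir as .tar.gz and upload it; return the object key.

    s3_client is expected to behave like boto3.client("s3").
    """
    dir_name = os.path.basename(os.path.normpath(source_dir))
    archive_name = dir_name + ".tar.gz"
    with tempfile.TemporaryDirectory() as scratch:
        archive_path = os.path.join(scratch, archive_name)
        with tarfile.open(archive_path, "w:gz") as archive:
            archive.add(source_dir, arcname=dir_name)
        key = backup_key(prefix, archive_name, now)
        s3_client.upload_file(archive_path, bucket, key)
    return key


# Possible usage, assuming boto3 is installed and credentials are configured
# (the bucket name is a placeholder):
#
#   import boto3
#   upload_backup(boto3.client("s3"), "my-backup-bucket", "/etc")
```

The scratch archive is removed as soon as the upload returns, so the node only needs enough free space for one archive at a time.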

That was my introduction to doing backups in AWS. Thank you for reading!