AWS provides a complete monitoring engine called CloudWatch. It works with metrics, including custom, user-provided metrics, and it can raise alarms when any such metric crosses a certain threshold. It is the main tool for performance monitoring tasks within AWS.

This text covers a monitoring scenario: you deploy an arbitrary application to the “Cloud” and then need to determine what limits its performance, be it the application code itself or limits enforced by Amazon.

Scenario

Let’s assume that you have just started using Amazon Web Services and are deploying applications on the free tier or on general purpose (T2) instances. You quickly learn that the general purpose instances work with “credits” that absorb short load spikes through performance bursting, but once these credits are exhausted, instance performance reverts to a baseline. These details may not make a lot of sense at first, but you need to know whether the application can meet the desired service targets while sticking to this setup.
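The credit mechanism is easier to follow with a small simulation. The sketch below is only a rough model, not Amazon's actual accounting: it works at hourly granularity, and the default figures (6 credits earned per hour, a cap of 144 credits, 30 initial credits) are assumptions based on the values AWS has documented for a t2.micro; check the current documentation for your instance type. One CPU credit corresponds to one vCPU running at 100% for one minute.

```python
def simulate_credit_balance(cpu_utilization_per_hour,
                            earn_rate=6.0,       # assumed: credits earned per hour (t2.micro)
                            max_balance=144.0,   # assumed: credit cap (t2.micro)
                            start_balance=30.0,  # assumed: initial credits
                            vcpus=1):
    """Return the approximate credit balance at the end of each hour.

    cpu_utilization_per_hour holds the CPU utilization the application
    asks for in each hour, as a fraction between 0.0 and 1.0.
    """
    balance = start_balance
    history = []
    for utilization in cpu_utilization_per_hour:
        # One hour at utilization u costs u * 60 credits per vCPU.
        spent = utilization * 60.0 * vcpus
        # The balance cannot go below zero: at that point the instance is
        # throttled to its baseline and spends only what it earns.
        balance = min(max_balance, max(0.0, balance + earn_rate - spent))
        history.append(round(balance, 2))
    return history


if __name__ == "__main__":
    # Two hours of full load followed by two idle hours.
    print(simulate_credit_balance([1.0, 1.0, 0.0, 0.0]))
```

With these assumed figures, a steady 10% utilization keeps the balance flat, which is what "baseline performance" means for such an instance.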

Prerequisites

If you are responsible for making sure that service targets are met or exceeded, you need to be in control of the Cloud environment where the service is deployed. Any of the following is a red flag:

- Not being given full access to AWS Console in order to be able to experiment with instance and storage volume types.

- Not being given access to monitoring engine (CloudWatch).

If you are only given a server instance in some “Cloud” and are told to deploy an application and then meet some service targets, you should just call it quits. Yes, do run: you are being set up for failure, through no fault of your own, other than trying to make it work.

Internal Monitoring?

AWS does not offer internal monitoring, in the sense of getting hypervisor metrics from within the instance itself. This is the standard industry practice: all monitoring should be external. From within the instance itself, resource caps being enforced will only be experienced as general slowness or through timeouts.

Note: you can retrieve static data from within the instance itself, the so-called instance metadata:

[root@ec2instance ~]# curl http://169.254.169.254/latest/meta-data/
ami-id
ami-launch-index
ami-manifest-path
block-device-mapping/
hostname
...

Monitoring with CloudWatch

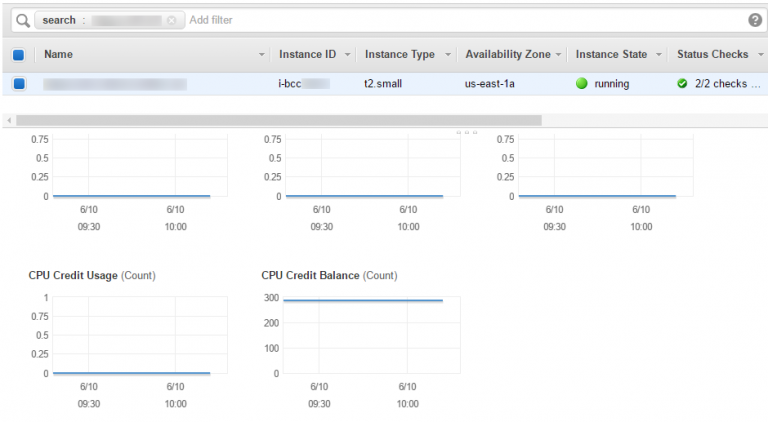

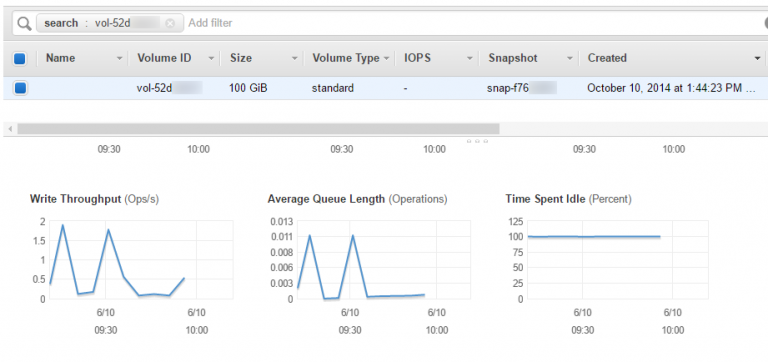

For every instance or volume, the console has a tab with graphs of metrics generated by the instance hypervisor: CPU utilization, network traffic, I/O statistics. By default, none of these reflect anything related to the operating system, like memory or disk usage, so you have to define custom metrics for these. Amazon provides tools to help out with the task (e.g. Amazon CloudWatch Monitoring Scripts for Linux).

In the scenario mentioned above, with the application running on a general purpose, performance-capped instance, you must pay attention to the following metrics in CloudWatch:

- On the instance listing: CPU Credit Usage and CPU Credit Balance. Credit usage is a prime indicator of computing spikes, while an exhausted balance indicates that computing performance was throttled by the hypervisor.

A CPU Credit Balance that stays at zero means that a compute-intensive application was placed on the wrong instance type for the expected load, but it can also point to issues with the application itself. On a side note, this behavior can easily be replicated by deploying a cryptocurrency miner or through a fork bomb.

- On the volume listing: Average Queue Length. If this value does not drop back toward 0 over extended periods of time, read or write operations on the volume routinely exceed the IOPS limits enforced by Amazon. Additional data can be found in the Read Throughput and Write Throughput metrics.

Certain usage scenarios (like web servers) are better suited for instances with more memory, so that excessive reads can be alleviated through caching. Excessive writes can sometimes be attributed to misconfigured logging, so adjusting settings may help reduce the amount of data being written to the storage volume. If none of these apply, the options are to deploy larger volumes (the IOPS quota depends on the volume size for gp2 volume types) or to work with “provisioned IOPS” (io1) volumes.
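To illustrate how the gp2 IOPS quota scales with volume size, here is a small sketch. The figures are assumptions taken from the AWS documentation as I know it (3 IOPS per GiB, a floor of 100 IOPS and a ceiling of 16,000 IOPS; the ceiling has changed over time), and burst credits are ignored, so treat the results as estimates only. Both function names are made up for this example.

```python
import math


def gp2_baseline_iops(size_gib, iops_per_gib=3, floor=100, ceiling=16000):
    """Estimate the baseline IOPS of a gp2 volume of the given size.

    All three limits are assumed values; verify them against the
    current EBS documentation.
    """
    return max(floor, min(ceiling, size_gib * iops_per_gib))


def gp2_size_for_iops(target_iops, iops_per_gib=3, floor=100, ceiling=16000):
    """Estimate the smallest gp2 volume (in GiB) with the given baseline."""
    if target_iops > ceiling:
        raise ValueError("target exceeds the assumed gp2 ceiling; consider io1")
    if target_iops <= floor:
        return 1  # every gp2 volume gets at least the floor
    return math.ceil(target_iops / iops_per_gib)


if __name__ == "__main__":
    for size in (8, 100, 1000):
        print(size, "GiB ->", gp2_baseline_iops(size), "IOPS")
    print("3000 IOPS needs about", gp2_size_for_iops(3000), "GiB")
```

If the required baseline is beyond what a reasonably sized gp2 volume provides, that is the point where provisioned IOPS volumes become the more sensible choice.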

Note 1: the CPU credit system only applies to “T” instance types (general purpose). Other instance types provide their nominal performance at all times.

Note 2: IOPS limits apply to EBS (SSD) volumes only. Some instance types come with additional local storage that provides performance closer to the hardware limits. Newer hard drive storage types (st1, sc1) are throughput-limited rather than operation-limited.

Advanced Monitoring

You can get CloudWatch data through the AWS CLI tools. This is useful for any advanced data analysis (e.g. looking over certain periods). I will not cover the installation of these tools on the local machine; you need an access key (an access key ID and a secret access key) in order to work with them.

On the monitoring side, an example series of steps could be:

1. Figure out instance metadata:

Note: this can easily be retrieved from AWS console.

If you know the name of the instance and the tag was properly set:

$ aws ec2 describe-instances --filters "Name=tag:Name,Values=myinstance.local"

If you know the instance id:

$ aws ec2 describe-instances --instance-ids i-abcd1234

Out of the large JSON data block that is returned, the following two pieces of information should be identified before proceeding:

- The instance id;

- The volume id (or ids, if there are more).
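Picking these two values out of the JSON by hand gets tedious, so a short script can help. The sketch below assumes the usual layout of the `describe-instances` output (`Reservations` → `Instances` → `BlockDeviceMappings` → `Ebs` → `VolumeId`); the function name and the trimmed sample document, including its ids, are made up for illustration.

```python
import json


def instance_and_volume_ids(describe_instances_json):
    """Map each instance id to the list of EBS volume ids attached to it."""
    data = json.loads(describe_instances_json)
    result = {}
    for reservation in data.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            volumes = [
                mapping["Ebs"]["VolumeId"]
                for mapping in instance.get("BlockDeviceMappings", [])
                if "Ebs" in mapping  # skip mappings without an EBS volume
            ]
            result[instance["InstanceId"]] = volumes
    return result


# Heavily trimmed, hypothetical sample of the command output.
SAMPLE = """
{"Reservations": [{"Instances": [{
    "InstanceId": "i-abcd1234",
    "BlockDeviceMappings": [
        {"DeviceName": "/dev/xvda", "Ebs": {"VolumeId": "vol-abcd1234"}},
        {"DeviceName": "/dev/xvdb", "Ebs": {"VolumeId": "vol-efgh5678"}}
    ]
}]}]}
"""

if __name__ == "__main__":
    print(instance_and_volume_ids(SAMPLE))
```

In practice you would save the command output to a file and pass its contents to the function instead of the sample string.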

2. Check the CPU credit balance:

$ aws cloudwatch get-metric-statistics --metric-name CPUCreditBalance --start-time 2016-06-05T23:18:00 --end-time 2016-06-06T23:18:00 --period 3600 --namespace AWS/EC2 --statistics Maximum --dimensions Name=InstanceId,Value=i-abcd1234

{
    "Datapoints": [
        {
            "Timestamp": "2016-06-06T21:18:00Z",
            "Maximum": 287.74000000000001,
            "Unit": "Count"
        },
        {
            "Timestamp": "2016-06-06T09:18:00Z",
            "Maximum": 287.81,
            "Unit": "Count"
        },
        ...

While Maximum was requested as the statistic in the example above, I suspect Minimum may provide better insight, since it would show how low the balance dropped within each period. You may experiment with various parameters in order to get the best combination.

Please note that the data points returned are not sorted in any way. You may want to import the JSON document into some program to go on with the analysis.
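As one possible way of doing that, the sketch below sorts the data points by timestamp and lists the periods where the balance was close to zero. It assumes the timestamp format shown in the output above (newer CLI versions may print a different one), the function names and the threshold of 1 credit are arbitrary choices for this example, and the third data point in the sample is invented to show a throttled period.

```python
import json
from datetime import datetime


def sorted_datapoints(raw_json, statistic):
    """Return (timestamp, value) pairs sorted chronologically."""
    data = json.loads(raw_json)
    points = [
        (datetime.strptime(p["Timestamp"], "%Y-%m-%dT%H:%M:%SZ"), p[statistic])
        for p in data["Datapoints"]
    ]
    return sorted(points, key=lambda point: point[0])


def low_balance_periods(points, threshold=1.0):
    """Return the timestamps whose value is below the threshold."""
    return [timestamp for timestamp, value in points if value < threshold]


SAMPLE = """
{"Datapoints": [
    {"Timestamp": "2016-06-06T21:18:00Z", "Maximum": 287.74, "Unit": "Count"},
    {"Timestamp": "2016-06-06T09:18:00Z", "Maximum": 287.81, "Unit": "Count"},
    {"Timestamp": "2016-06-06T15:18:00Z", "Maximum": 0.0, "Unit": "Count"}
]}
"""

if __name__ == "__main__":
    points = sorted_datapoints(SAMPLE, "Maximum")
    for timestamp, value in points:
        print(timestamp.isoformat(), value)
    print("Low balance at:", low_balance_periods(points))
```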

3. Determine what metrics are supported for each volume:

$ aws cloudwatch list-metrics --namespace AWS/EBS --dimensions Name=VolumeId,Value=vol-abcd1234

{
    "Metrics": [
        {
            "Namespace": "AWS/EBS",
            "Dimensions": [
                {
                    "Name": "VolumeId",
                    "Value": "vol-abcd1234"
                }
            ],
            "MetricName": "VolumeQueueLength"
        },
        {
            "Namespace": "AWS/EBS",
            "Dimensions": [
                {
                    "Name": "VolumeId",
                    "Value": "vol-abcd1234"
                }
            ],
            "MetricName": "VolumeReadOps"
        },
        ...

Please make note of the metric names; they differ from the graph titles in the AWS Console (for example, the “Average Queue Length” graph corresponds to the VolumeQueueLength metric).
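Since only the metric names matter at this step, a few lines of code can reduce the listing to just those. This is a minimal sketch that assumes the output layout shown above; the function name is made up, and the sample contains only the two entries visible in the listing.

```python
import json


def metric_names(list_metrics_json):
    """Return the distinct metric names from list-metrics output, sorted."""
    data = json.loads(list_metrics_json)
    return sorted({metric["MetricName"] for metric in data["Metrics"]})


SAMPLE = """
{"Metrics": [
    {"Namespace": "AWS/EBS",
     "Dimensions": [{"Name": "VolumeId", "Value": "vol-abcd1234"}],
     "MetricName": "VolumeQueueLength"},
    {"Namespace": "AWS/EBS",
     "Dimensions": [{"Name": "VolumeId", "Value": "vol-abcd1234"}],
     "MetricName": "VolumeReadOps"}
]}
"""

if __name__ == "__main__":
    for name in metric_names(SAMPLE):
        print(name)
```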

4. Check Average Queue Lengths for individual volumes:

$ aws cloudwatch get-metric-statistics --metric-name VolumeQueueLength --start-time 2016-06-05T23:18:00 --end-time 2016-06-06T23:18:00 --period 3600 --namespace AWS/EBS --statistics Sum --dimensions Name=VolumeId,Value=vol-abcd1234

{
    "Datapoints": [
        {
            "Timestamp": "2016-06-06T21:18:00Z",
            "Sum": 0.064336177777777712,
            "Unit": "Count"
        },
        {
            "Timestamp": "2016-06-06T09:18:00Z",
            "Sum": 0.0065512616666666586,
            "Unit": "Count"
        },
        ...

All operations go through the queue on their way to/from storage volumes, so on a busy volume this “Sum” will rarely be exactly “0”. VolumeQueueLength reports the number of read and write requests waiting to be completed, so small values should indicate optimal performance.

Again, please note that the data points are not sorted in any way. You may have to import the JSON document into some program to do the analysis.
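A possible shape for such an analysis is sketched below: it summarizes the queue length values and lists the periods that exceed a threshold. The threshold of 1.0 is an arbitrary assumption that you would tune for your own workload, the function name is made up, and the third data point in the sample is invented to show a congested period.

```python
import json
from statistics import mean


def queue_length_report(raw_json, statistic="Sum", threshold=1.0):
    """Summarize queue length data points; returns None when there are none."""
    # Timestamps in this ISO-like format sort correctly as plain strings.
    points = sorted(json.loads(raw_json)["Datapoints"],
                    key=lambda point: point["Timestamp"])
    if not points:
        return None
    values = [point[statistic] for point in points]
    return {
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "above_threshold": [point["Timestamp"] for point in points
                            if point[statistic] > threshold],
    }


SAMPLE = """
{"Datapoints": [
    {"Timestamp": "2016-06-06T21:18:00Z", "Sum": 0.064336177777777712, "Unit": "Count"},
    {"Timestamp": "2016-06-06T09:18:00Z", "Sum": 0.0065512616666666586, "Unit": "Count"},
    {"Timestamp": "2016-06-06T15:18:00Z", "Sum": 2.5, "Unit": "Count"}
]}
"""

if __name__ == "__main__":
    print(queue_length_report(SAMPLE))
```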

Conclusion

When working with Cloud environments, please be aware of their limitations: AWS instances do not behave like physical servers; they have various resource limits enforced by the hypervisor. You should always monitor your instance behavior through CloudWatch.

Note: This text was written by an AWS Certified Solutions Architect (Associate). Please always work with an expert when setting up production environments.